When to apply RAG vs Fine-Tuning: A practical guide

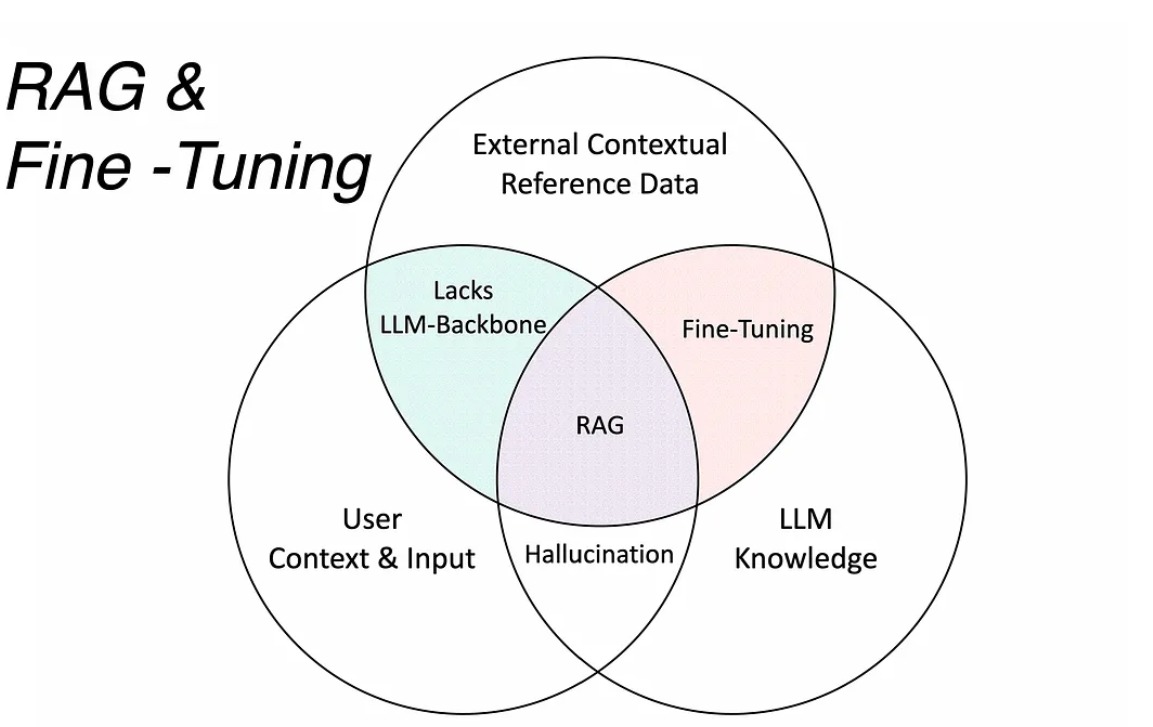

In the rapidly evolving landscape of AI implementation, two approaches have emerged as powerful tools for enhancing Large Language Models (LLMs): Retrieval Augmented Generation (RAG) and Fine-tuning. While both methods can improve model performance, they serve different purposes and come with distinct advantages and trade-offs. Understanding when to use each approach is crucial for successful AI implementation.

Architectural decisions matter a great deal in AI implementations, because each use case demands its own approach to achieve quality results. Simply deploying a Large Language Model without careful consideration of implementation strategy often leads to suboptimal outcomes. Success depends not just on the power of the underlying model, but on how well it is integrated into a thoughtfully designed pipeline. Organizations that invest in understanding these approaches, and where each one applies, can build robust solutions that address their specific challenges rather than settling for generic implementations that fall short of business requirements.

Understanding the Basics

Retrieval Augmented Generation (RAG) enhances LLMs by retrieving relevant knowledge from a database before generating text. Think of it as giving the model access to a specialized knowledge base that it can reference while responding. For example, a customer service AI could retrieve specific product documentation before answering customer queries.
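As a rough illustration of the retrieve-then-generate flow, the sketch below scores a few documents against a query using simple word-count cosine similarity and places the best match into a prompt. The `DOCS` contents, the function names, and the prompt wording are all invented for this example. A production system would typically use learned embeddings and an actual LLM call, both of which are omitted here.

```python
import math
from collections import Counter

# Hypothetical mini knowledge base; real systems would hold product documentation.
DOCS = {
    "returns": "Items can be returned within 30 days of delivery with a receipt.",
    "shipping": "Standard shipping takes 3 to 5 business days.",
    "warranty": "The warranty covers manufacturing defects for two years.",
}


def tokenize(text):
    """Lowercase the text and strip basic punctuation from each word."""
    return [w.strip(".,?!").lower() for w in text.split()]


def cosine(a, b):
    """Cosine similarity between two word-count vectors (Counters)."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query, docs, k=1):
    """Return the k (doc_id, text) pairs most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(
        docs.items(),
        key=lambda kv: cosine(q, Counter(tokenize(kv[1]))),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query, docs, k=1):
    """Assemble the prompt that would be sent to the LLM (the call itself is omitted)."""
    context = "\n".join(text for _, text in retrieve(query, docs, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"
```

Calling `build_prompt("How long does shipping take?", DOCS)` yields a prompt whose context is the shipping entry, which is the text the model would then answer from.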

Fine-tuning, on the other hand, involves continuing the training of a pre-trained model on domain-specific data to adapt its behavior or knowledge. It’s like teaching an experienced professional new specialized skills for a specific role. For instance, you might fine-tune a general-purpose LLM so that it consistently formats outputs in a company-specific way.

When to Use RAG

RAG excels in scenarios where current information and data flexibility are paramount. When your organization needs to work with frequently updated information, RAG provides the ability to modify the knowledge base without retraining the model. This makes it particularly valuable for handling proprietary data that changes often, such as product catalogs or company policies.
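To make the "no retraining" point concrete, here is a minimal sketch of a knowledge base whose entries are changed in place. The `KnowledgeBase` class and its keyword-overlap search are hypothetical stand-ins for a real document store; the point is only that an update is a data operation, and the model itself is never touched.

```python
class KnowledgeBase:
    """Toy in-memory store; stands in for a real document or vector store."""

    def __init__(self):
        self._docs = {}

    def upsert(self, doc_id, text):
        """Add a document or overwrite the existing one with the same id."""
        self._docs[doc_id] = text

    def delete(self, doc_id):
        """Remove a document if it exists."""
        self._docs.pop(doc_id, None)

    def get(self, doc_id):
        return self._docs.get(doc_id)

    def search(self, query):
        """Return ids of documents sharing at least one word with the query."""
        terms = set(query.lower().split())
        scored = [
            (len(terms & set(text.lower().split())), doc_id)
            for doc_id, text in self._docs.items()
        ]
        ranked = sorted(scored, key=lambda s: (-s[0], s[1]))
        return [doc_id for score, doc_id in ranked if score > 0]


kb = KnowledgeBase()
kb.upsert("pricing", "The Pro plan costs 20 dollars per month")
# A price change is a single write; the next query sees the new text.
kb.upsert("pricing", "The Pro plan costs 25 dollars per month")
```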

The approach also shines in situations requiring transparency and clear source attribution. Since RAG explicitly retrieves specific pieces of information and passes them to the model, you can trace which sources were supplied for each response and, if the model is prompted to cite them, which passages support each claim. This transparency is invaluable for compliance requirements and building trust with end-users.
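One simple way to support attribution is to number the retrieved passages in the prompt and map any `[n]` markers in the answer back to their sources. The sketch below assumes the model has been instructed to cite passages this way; the marker format, the function names, and the sample source ids are all illustrative choices, not a standard.

```python
import re


def format_context(passages):
    """Number each (source_id, text) passage so the model can cite it as [n]."""
    return "\n".join(
        f"[{i}] {text}" for i, (_, text) in enumerate(passages, start=1)
    )


def cited_sources(answer, passages):
    """Map [n] markers found in the answer back to source ids, in order of first use."""
    sources = []
    for marker in re.findall(r"\[(\d+)\]", answer):
        idx = int(marker) - 1
        # Ignore markers that do not correspond to a supplied passage.
        if 0 <= idx < len(passages) and passages[idx][0] not in sources:
            sources.append(passages[idx][0])
    return sources


# Hypothetical retrieved passages and a hypothetical model answer.
passages = [
    ("policy.pdf#p3", "Refunds are available within 30 days."),
    ("faq.md", "Shipping takes 3 to 5 business days."),
]
answer = "You can request a refund within 30 days [1]."
```

Here `cited_sources(answer, passages)` returns `["policy.pdf#p3"]`, which can be logged or shown to the user alongside the response.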

Another significant advantage of RAG is its effectiveness with limited training data. Unlike fine-tuning, which typically requires substantial amounts of high-quality training examples, RAG needs only the source documents themselves and can work effectively with a small corpus, since it’s primarily focused on information retrieval rather than behavior modification.

When to Use Fine-tuning

Fine-tuning becomes the preferred approach when consistent style or formatting is crucial to your application. When you need a model to maintain a specific tone of voice or adhere to strict output formatting requirements, fine-tuning can embed these patterns deeply into the model’s behavior.

This approach is particularly powerful for complex instruction following. Through fine-tuning, you can teach a model to reliably execute detailed, multi-step processes without needing to spell out the entire procedure in each prompt. Shorter prompts can reduce inference cost and latency, and the learned behavior can improve reliability for specialized tasks.

Performance optimization is another key scenario where fine-tuning shines. By embedding domain-specific knowledge and patterns directly into the model, you can skip the retrieval step and long instruction-heavy prompts, which tends to lower response latency, and you can improve handling of edge cases represented in the training data. This is especially valuable in production environments where response speed and consistency are critical.

Technical Implementation Details

The implementation of RAG requires careful attention to several key components. At its core, RAG depends on a well-designed vector database setup. The choice of vector database technology should align with your specific needs for scale, speed, and functionality. Beyond the database itself, you’ll need to develop robust document processing pipelines that handle chunking, embedding generation, and efficient indexing.
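The processing pipeline described above can be sketched in a few lines. This example splits text into overlapping word-based chunks and builds a list-based index. The chunk size, the overlap, the `doc_id#n` id scheme, and the caller-supplied `embed` function are all assumptions made for illustration; real pipelines often chunk by tokens or document structure and write to a vector database instead of a list.

```python
def chunk_words(text, size=50, overlap=10):
    """Split text into chunks of `size` words, each sharing `overlap` words with the previous one."""
    if size <= 0 or overlap < 0 or overlap >= size:
        raise ValueError("require size > 0 and 0 <= overlap < size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks


def build_index(documents, embed, size=50, overlap=10):
    """Chunk every document and store each chunk with its embedding.

    `embed` is any function mapping a string to a vector; which embedding
    model to use is left open here.
    """
    index = []
    for doc_id, text in documents.items():
        for n, chunk in enumerate(chunk_words(text, size, overlap)):
            index.append({"id": f"{doc_id}#{n}", "text": chunk, "vector": embed(chunk)})
    return index
```

The overlap keeps a sentence that straddles a chunk boundary retrievable from at least one chunk, at the cost of some duplicated storage.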

The retrieval strategy in RAG implementations deserves special consideration. A successful implementation often combines multiple retrieval methods, including semantic similarity search and keyword-based approaches. The architecture should also manage the context window, deciding how many retrieved passages fit into the prompt, and apply relevance thresholds that drop low-scoring passages, so that only the most appropriate information is retrieved and utilized.
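One common way to combine a semantic ranking with a keyword ranking is reciprocal rank fusion (RRF), which sums `1 / (k + rank)` for each document across the rankings. The sketch below uses RRF as one possible fusion method, then applies a relevance threshold and a context budget. The constant `k=60` is a conventional default, the whitespace word count is a crude stand-in for a real tokenizer, and the function names and sample data are hypothetical.

```python
def reciprocal_rank_fusion(rankings, k=60):
    """Fuse several ranked lists of doc ids into one list of (doc_id, score), best first."""
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)


def pack_context(fused, texts, min_score, max_tokens):
    """Select passages above the relevance threshold that fit in the token budget."""
    selected, used = [], 0
    for doc_id, score in fused:
        if score < min_score:
            break  # fused is sorted, so every remaining passage is below the threshold
        cost = len(texts[doc_id].split())  # crude token estimate (assumption)
        if used + cost > max_tokens:
            continue  # skip passages that would overflow the budget
        selected.append(doc_id)
        used += cost
    return selected


# Hypothetical outputs of a semantic search and a keyword search.
semantic = ["a", "b", "c"]
keyword = ["b", "d", "a"]
fused = reciprocal_rank_fusion([semantic, keyword])
```

Documents that rank well in both lists rise to the top, which is why `b` and `a` lead the fused ranking in this example.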

Fine-tuning implementation begins with meticulous data preparation. The quality of your training data directly impacts the success of fine-tuning. This involves not just cleaning and formatting the data, but also careful consideration of how to structure examples to teach the desired behavior. The training configuration itself requires thoughtful selection of hyperparameters and base models.
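A small part of that data preparation can be sketched as follows: trimming whitespace, dropping incomplete examples, removing exact duplicates, and serializing to JSON Lines. The `prompt`/`completion` field names are an assumption for this example. Training services and libraries each define their own schema, so check the one you use before adopting this shape.

```python
import json


def prepare_examples(raw_examples):
    """Clean raw examples: strip whitespace, drop incomplete ones, remove exact duplicates."""
    seen, cleaned = set(), []
    for ex in raw_examples:
        prompt = (ex.get("prompt") or "").strip()
        completion = (ex.get("completion") or "").strip()
        if not prompt or not completion:
            continue  # an example needs both an input and a target output
        key = (prompt, completion)
        if key in seen:
            continue
        seen.add(key)
        cleaned.append({"prompt": prompt, "completion": completion})
    return cleaned


def to_jsonl(examples):
    """Serialize examples as JSON Lines, one example per line."""
    return "\n".join(json.dumps(ex, ensure_ascii=False) for ex in examples)
```

Cleaning of this kind does not replace reviewing the examples by hand; it only removes the most mechanical defects before training.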

Deployment considerations for fine-tuned models include robust versioning strategies and monitoring systems. You’ll need to establish clear procedures for model updates and rollbacks, along with comprehensive testing protocols to ensure consistent performance.
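As a minimal sketch of the versioning and rollback idea, the class below tracks which model version is live and can revert to the previous one. `ModelRegistry` and the version strings are hypothetical; a real deployment would persist this state and tie it to a model-serving system and its monitoring.

```python
class ModelRegistry:
    """Tracks promoted model versions so the active one can be rolled back."""

    def __init__(self):
        self._history = []  # promoted versions, oldest first

    def promote(self, version):
        """Make `version` the active model."""
        self._history.append(version)

    @property
    def active(self):
        """The currently served version, or None if nothing was promoted."""
        return self._history[-1] if self._history else None

    def rollback(self):
        """Retire the active version and return the one that becomes active."""
        if len(self._history) < 2:
            raise RuntimeError("no earlier version to roll back to")
        self._history.pop()
        return self.active


registry = ModelRegistry()
registry.promote("support-bot-v1")
registry.promote("support-bot-v2")
```

If monitoring flags a regression in `support-bot-v2`, calling `registry.rollback()` makes `support-bot-v1` active again.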

Evaluation Metrics and Testing

Effective evaluation of RAG systems requires a multi-faceted approach to measurement. When assessing retrieval quality, metrics like Mean Reciprocal Rank (MRR) and Normalized Discounted Cumulative Gain (NDCG) provide insights into how well the system is finding relevant information. Beyond retrieval, you’ll want to evaluate response quality through measures of relevance, consistency, and appropriate use of retrieved context.
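Both retrieval metrics are straightforward to compute from ranked results and relevance labels. MRR averages, over queries, the reciprocal of the rank at which the first relevant document appears. NDCG divides the discounted cumulative gain of the actual ranking by that of the ideal ranking. The sketch below uses the linear-gain form of DCG (some definitions use `2**gain - 1` instead), and the function names and input shapes are choices made for this example.

```python
import math


def mean_reciprocal_rank(ranked_results, relevant_sets):
    """Average of 1/rank of the first relevant document for each query."""
    if not ranked_results:
        return 0.0
    total = 0.0
    for ranked, relevant in zip(ranked_results, relevant_sets):
        for rank, doc_id in enumerate(ranked, start=1):
            if doc_id in relevant:
                total += 1.0 / rank
                break  # only the first relevant hit counts
    return total / len(ranked_results)


def dcg(gains):
    """Discounted cumulative gain with linear gains and a log2 position discount."""
    return sum(g / math.log2(i + 1) for i, g in enumerate(gains, start=1))


def ndcg_at_k(ranked, relevance, k):
    """NDCG@k for one query; `relevance` maps doc id to a graded relevance score."""
    gains = [relevance.get(doc_id, 0) for doc_id in ranked[:k]]
    ideal = sorted(relevance.values(), reverse=True)[:k]
    ideal_dcg = dcg(ideal)
    return dcg(gains) / ideal_dcg if ideal_dcg else 0.0
```

A query whose first relevant document appears at rank 2 contributes 0.5 to MRR, and a ranking that matches the ideal order scores an NDCG of 1.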

For fine-tuned models, evaluation starts during the training process with careful monitoring of learning curves and model convergence. However, the real test comes in production, where you’ll need to measure both technical metrics like inference latency and business metrics like task completion rates and user satisfaction.
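For the latency side of that measurement, averages can hide slow outliers, so it is common to report percentiles. The sketch below times a callable and summarizes the samples with nearest-rank percentiles. The function names are hypothetical, and `fn` stands in for whatever call invokes your model.

```python
import math
import time


def measure_latency(fn, inputs):
    """Call fn on each input and return the elapsed wall-clock seconds per call."""
    samples = []
    for x in inputs:
        start = time.perf_counter()
        fn(x)
        samples.append(time.perf_counter() - start)
    return samples


def summarize(samples):
    """Summarize samples with nearest-rank percentiles (p50, p95) and the maximum."""
    if not samples:
        raise ValueError("no samples to summarize")
    ordered = sorted(samples)

    def percentile(p):
        # Nearest-rank method: the smallest value with at least p% of samples at or below it.
        rank = math.ceil(p * len(ordered) / 100)
        return ordered[max(rank, 1) - 1]

    return {"p50": percentile(50), "p95": percentile(95), "max": ordered[-1]}
```

Tracking p95 alongside the median makes it easier to spot the slow tail that users notice most.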

Comparative testing between approaches should involve carefully designed A/B tests with clear evaluation rubrics. This testing should encompass not just technical performance but also business impact metrics that align with your organizational goals. Consider factors like user satisfaction, task completion rates, and overall cost-effectiveness.
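When an A/B test compares task completion rates, a two-proportion z-test is one simple way to check whether the observed difference is likely to be more than noise. The sketch below is a textbook version using the pooled standard error and a two-sided p-value. The function name and the sample counts are made up for illustration, and the test assumes independent users and reasonably large samples.

```python
import math


def two_proportion_z(success_a, n_a, success_b, n_b):
    """Two-sided z-test for a difference in success rates between variants A and B.

    Returns (z, p_value); a positive z means B has the higher rate.
    """
    p_a, p_b = success_a / n_a, success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0  # both variants succeeded (or failed) on every task
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail probability
    return z, p_value


# Hypothetical counts: variant A (e.g. RAG) vs variant B (e.g. fine-tuned).
z, p = two_proportion_z(success_a=400, n_a=1000, success_b=600, n_b=1000)
```

A small p-value only says the completion rates differ; whether the difference justifies the cost of either approach is still a business judgment.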

The Power of Combining Approaches

Many successful implementations actually combine both RAG and fine-tuning to leverage their complementary strengths. You might fine-tune a model for consistent behavior and formatting while using RAG to provide it with up-to-date information. This hybrid approach can offer the best of both worlds: the reliability and efficiency of fine-tuning with the flexibility and current knowledge of RAG.

Looking Forward

The field of AI is rapidly evolving, and both RAG and fine-tuning continue to advance. The key to success lies not in choosing one approach over the other, but in understanding how each can serve your specific needs. Begin by clearly defining your requirements and success metrics, then design an implementation strategy that might incorporate either or both approaches as needed.

Consider starting with a small-scale pilot to validate your approach before scaling up. Monitor performance carefully and be prepared to adjust your strategy based on real-world results. Remember that successful implementation often depends more on proper execution and ongoing refinement than on the initial choice of approach.